Chang Liu 刘 畅

Zhongguancun Academy

Biography

I am currently a faculty member at Zhongguancun Academy, and on duty of the AI Theory and Algorithm Division in the AI Core Department [alternative homepage (in Chinese)]. Before that, I was a senior researcher at Microsoft Research AI for Science (科学智能中心) and in the Machine Learning Group at Microsoft Research Asia. I received my Ph.D. degree (cum laude) from the TSAIL Group at the Department of Computer Science and Technology of Tsinghua University, supervised by Prof. Jun Zhu. I received my B.Sc. degree from the Department of Physics of Tsinghua University. I was a visiting scholar in Prof. Lawrence Carin's group at Duke University from Oct. 2017 to Oct. 2018. My research interests include sampling methods and theory, generative model formulations and technical designs, learning paradigms beyond data, and AI methods for contemporary science problems.

Research

[Google Scholar] [GitHub] [Semantic Scholar]

("*": corresponding author; "†": equal contribution)

Preprints

- FlexRibbon: Joint Sequence and Structure Pretraining for Protein Modeling.

Jianwei Zhu†, Yu Shi†, Ran Bi†, Peiran Jin†*, Chang Liu†*, Zhe Zhang†, Haitao Huang, Zekun Guo, Pipi Hu, Fusong Ju, Lin Huang, Xinran Tai, Chenao Li, Kaiyuan Gao, Xinran Wei, Huanhuan Xia, Jia Zhang, Yaosen Min, Zun Wang, Yusong Wang, Liang He, Haiguang Liu*, Tao Qin*. 2025.

[Paper & Appendix] [bioRxiv]

- Diagnosing and Improving Diffusion Models by Estimating the Optimal Loss Value.

Yixian Xu†, Shengjie Luo†, Liwei Wang, Di He*, Chang Liu*. 2025.

[Paper & Appendix] [arXiv]

- NatureLM: Deciphering the Language of Nature for Scientific Discovery.

Yingce Xia†, Peiran Jin†, Shufang Xie†, Liang He†, Chuan Cao†, Renqian Luo†, Guoqing Liu†, Yue Wang†, Zequn Liu†, Yuan-Jyue Chen†, Zekun Guo†, Yeqi Bai, Pan Deng, Yaosen Min, Ziheng Lu, Hongxia Hao, Han Yang, Jielan Li, Chang Liu, Jia Zhang, Jianwei Zhu, Kehan Wu, Wei Zhang, Kaiyuan Gao, Qizhi Pei, Qian Wang, Xixian Liu, Yanting Li, Houtian Zhu, Yeqing Lu, Mingqian Ma, Zun Wang, Tian Xie, Krzysztof Maziarz, Marwin Segler, Zhao Yang, Zilong Chen, Yu Shi, Shuxin Zheng, Lijun Wu, Chen Hu, Peggy Dai, Tie-Yan Liu, Haiguang Liu, Tao Qin. 2025.

[Paper & Appendix] [arXiv]

- MatterSim: A Deep Learning Atomistic Model Across Elements, Temperatures and Pressures.

Han Yang†, Chenxi Hu†, Yichi Zhou†, Xixian Liu†, Yu Shi†, Jielan Li†, Guanzhi Li†, Zekun Chen†, Shuizhou Chen†, Claudio Zeni, Matthew Horton, Robert Pinsler, Andrew Fowler, Daniel Zügner, Tian Xie, Jake Smith, Lixin Sun, Qian Wang, Lingyu Kong, Chang Liu, Hongxia Hao, Ziheng Lu. 2024.

[Paper & Appendix] [arXiv]

Publications

- CellNavi Predicts Genes Directing Cellular Transitions by Learning a Gene Graph-Enhanced Cell State Manifold.

Tianze Wang†, Yan Pan†, Fusong Ju†, Shuxin Zheng†, Chang Liu†, Yaosen Min, Xinwei Liu, Huanhuan Xia, Guoqing Liu, Haiguang Liu, Pan Deng*. Nature Cell Biology, 2025.

[Paper] [Appendix] [bioRxiv]

- Potential Score Matching: Debiasing Molecular Structure Sampling with Potential Energy Guidance.

Liya Guo, Zun Wang, Chang Liu*, Junzhe Li, Pipi Hu*, Yi Zhu*, Tao Qin. Transactions on Machine Learning Research (TMLR), 2025.

[Paper & Appendix] [arXiv]

- E2Former: An Efficient and Equivariant Transformer with Linear-Scaling Tensor Products.

Yunyang Li, Lin Huang, Zhihao Ding, Xinran Wei, Chu Wang, Han Yang, Zun Wang, Chang Liu, Yu Shi, Peiran Jin, Tao Qin, Mark Gerstein, Jia Zhang. Neural Information Processing Systems (NeurIPS; Spotlight), 2025.

[Paper & Appendix] [arXiv]

- Efficient and Scalable Density Functional Theory Hamiltonian Prediction through Adaptive Sparsity.

Erpai Luo†, Xinran Wei†, Lin Huang, Yunyang Li, Han Yang, Zaishuo Xia, Zun Wang, Chang Liu, Bin Shao, Jia Zhang. International Conference on Machine Learning (ICML), 2025.

[Paper & Appendix] [arXiv]

- Enhancing the Scalability and Applicability of Kohn-Sham Hamiltonians for Molecular Systems.

Yunyang Li†, Zaishuo Xia†, Lin Huang†, Xinran Wei, Han Yang, Sam Harshe, Zun Wang, Chang Liu, Jia Zhang, Bin Shao, Mark B. Gerstein. International Conference on Learning Representations (ICLR; Spotlight), 2025.

[Paper & Appendix] [arXiv]

- Physical Consistency Bridges Heterogeneous Data in Molecular Multi-Task Learning.

Yuxuan Ren†, Dihan Zheng†, Chang Liu*, Peiran Jin, Yu Shi, Lin Huang, Jiyan He, Shengjie Luo, Tao Qin, Tie-Yan Liu. Neural Information Processing Systems (NeurIPS), 2024.

[Paper & Appendix] [arXiv] [Slides] [Poster]

- Infusing Self-Consistency into Density Functional Theory Hamiltonian Prediction via Deep Equilibrium Models.

Zun Wang, Chang Liu, Nianlong Zou, He Zhang, Xinran Wei, Lin Huang, Lijun Wu, Bin Shao. Neural Information Processing Systems (NeurIPS), 2024.

[Paper & Appendix] [arXiv]

- Self-Consistency Training for Density-Functional-Theory Hamiltonian Prediction.

He Zhang, Chang Liu*, Zun Wang, Xinran Wei, Siyuan Liu, Nanning Zheng*, Bin Shao, Tie-Yan Liu. International Conference on Machine Learning (ICML), 2024.

[Paper & Appendix] [arXiv] [Slides] [Poster]

- Predicting Equilibrium Distributions for Molecular Systems with Deep Learning.

Shuxin Zheng†*, Jiyan He†, Chang Liu†*, Yu Shi†, Ziheng Lu†, Weitao Feng, Fusong Ju, Jiaxi Wang, Jianwei Zhu, Yaosen Min, He Zhang, Shidi Tang, Hongxia Hao, Peiran Jin, Chi Chen, Frank Noé, Haiguang Liu†*, Tie-Yan Liu*. Nature Machine Intelligence, 2024.

[Paper] [Supplementary Information] [arXiv] [Project Homepage] [Slides] [BibTeX]

- Overcoming the Barrier of Orbital-Free Density Functional Theory for Molecular Systems Using Deep Learning.

He Zhang†, Siyuan Liu†, Jiacheng You, Chang Liu*, Shuxin Zheng*, Ziheng Lu, Tong Wang, Nanning Zheng, Bin Shao*. Nature Computational Science, 2024.

[Full-text access] [arXiv] [Code (inference)] [Model checkpoints & evaluation data] [Slides] [Podcast] [BibTeX]

- Invertible Rescaling Network and Its Extensions.

Mingqing Xiao, Shuxin Zheng, Chang Liu*, Zhouchen Lin*, Tie-Yan Liu. International Journal of Computer Vision, 2023.

[Full-text access] [arXiv] [Code] [BibTeX]

- Geometry in sampling methods: A review on manifold MCMC and particle-based variational inference methods.

Chang Liu, Jun Zhu. Advancements in Bayesian Methods and Implementation, in Handbook of Statistics (vol. 47), Elsevier, 2022.

[Full-text paper] [BibTeX]

- Direct Molecular Conformation Generation.

Jinhua Zhu, Yingce Xia*, Chang Liu*, Lijun Wu, Shufang Xie, Yusong Wang, Tong Wang, Tao Qin, Wengang Zhou, Houqiang Li, Haiguang Liu, Tie-Yan Liu. Transactions on Machine Learning Research (TMLR), 2022.

[Paper & Appendix] [arXiv] [Code] [BibTeX]

- Rapid Inference of Nitrogen Oxide Emissions Based on a Top-Down Method with a Physically Informed Variational Autoencoder.

Jia Xing, Siwei Li, Shuxin Zheng, Chang Liu, Xiaochun Wang, Lin Huang, Ge Song, Yihan He, Shuxiao Wang, Shovan Kumar Sahu, Jia Zhang, Jiang Bian, Yun Zhu, Tie-Yan Liu, Jiming Hao. Environmental Science & Technology, 2022.

[BibTeX]

- Sampling with Mirrored Stein Operators.

Jiaxin Shi, Chang Liu, Lester Mackey. International Conference on Learning Representations (ICLR; Spotlight), 2022.

[Paper & Appendix] [arXiv] [Code] [Slides] [BibTeX]

- PriorGrad: Improving Conditional Denoising Diffusion Models with Data-Dependent Adaptive Prior.

Sang-gil Lee, Heeseung Kim, Chaehun Shin, Xu Tan, Chang Liu, Qi Meng, Tao Qin, Wei Chen, Sungroh Yoon, Tie-Yan Liu. International Conference on Learning Representations (ICLR), 2022.

[Paper & Appendix] [arXiv] [Code: vocoder, acoustic model] [BibTeX]

- On the Generative Utility of Cyclic Conditionals.

Chang Liu*, Haoyue Tang, Tao Qin, Jintao Wang, Tie-Yan Liu. Neural Information Processing Systems (NeurIPS), 2021.

[Paper & Appendix] [arXiv] [Code] [Slides] [Poster] [Video] [Media (Chinese)] [BibTeX]

- Learning Causal Semantic Representation for Out-of-Distribution Prediction.

Chang Liu*, Xinwei Sun, Jindong Wang, Haoyue Tang, Tao Li, Tao Qin, Wei Chen, Tie-Yan Liu. Neural Information Processing Systems (NeurIPS), 2021.

[Paper & Appendix] [arXiv] [Code] [Slides] [Poster] [Video] [Media (Chinese)] [BibTeX]

- Recovering Latent Causal Factor for Generalization to Distributional Shifts.

(Formerly named "Latent Causal Invariant Model")

Xinwei Sun, Botong Wu, Xiangyu Zheng, Chang Liu, Wei Chen, Tao Qin, Tie-Yan Liu. Neural Information Processing Systems (NeurIPS), 2021.

[Paper] [Appendix] [arXiv] [Code] [Slides] [Poster] [Media (Chinese)] [BibTeX]

- Object-Aware Regularization for Addressing Causal Confusion in Imitation Learning.

Jongjin Park†, Younggyo Seo†, Chang Liu, Li Zhao, Tao Qin, Jinwoo Shin, Tie-Yan Liu. Neural Information Processing Systems (NeurIPS), 2021.

[Paper & Appendix] [arXiv] [Code] [Slides] [Poster] [Media (Chinese)] [BibTeX]

- Generalizing to Unseen Domains: A Survey on Domain Generalization.

Jindong Wang, Cuiling Lan, Chang Liu, Yidong Ouyang, Wenjun Zeng, Tao Qin. International Joint Conference on Artificial Intelligence (IJCAI; survey track), 2021.

New long version published at IEEE Transactions on Knowledge and Data Engineering (TKDE), 2022.

[Paper] [arXiv] [BibTeX]

- Invertible Image Rescaling.

Mingqing Xiao, Shuxin Zheng*, Chang Liu*, Yaolong Wang, Di He, Guolin Ke, Jiang Bian, Zhouchen Lin, Tie-Yan Liu. European Conference on Computer Vision (ECCV; Oral), 2020.

[Paper & Appendix] [arXiv] [Code] [BibTeX]

- Variance Reduction and Quasi-Newton for Particle-Based Variational Inference.

Michael H. Zhu*, Chang Liu*, Jun Zhu*. International Conference on Machine Learning (ICML), 2020.

[Paper] [Appendix] [Code] [Slides] [Video] [BibTeX]

- Understanding MCMC Dynamics as Flows on the Wasserstein Space.

Chang Liu, Jingwei Zhuo, Jun Zhu. International Conference on Machine Learning (ICML), 2019.

[Paper & Appendix] [arXiv] [Code] [Slides] [Poster] [BibTeX]

- Understanding and Accelerating Particle-Based Variational Inference.

Chang Liu, Jingwei Zhuo, Pengyu Cheng, Ruiyi Zhang, Jun Zhu, Lawrence Carin. International Conference on Machine Learning (ICML), 2019.

[Paper & Appendix] [arXiv] [Code] [Slides] [Poster] [BibTeX]

- Variational Annealing of GANs: A Langevin Perspective.

Chenyang Tao, Shuyang Dai, Liqun Chen, Ke Bai, Junya Chen, Chang Liu, Ruiyi Zhang, Georgiy Bobashev, Lawrence Carin. International Conference on Machine Learning (ICML), 2019.

[Paper] [Appendix] [BibTeX]

- Straight-Through Estimator as Projected Wasserstein Gradient Flow.

Pengyu Cheng, Chang Liu, Chunyuan Li, Dinghan Shen, Ricardo Henao, Lawrence Carin. NeurIPS 2018 Bayesian Deep Learning Workshop, 2018.

[Paper] [arXiv] [BibTeX]

- Message Passing Stein Variational Gradient Descent.

Jingwei Zhuo, Chang Liu, Jiaxin Shi, Jun Zhu, Ning Chen, Bo Zhang. International Conference on Machine Learning (ICML), 2018.

[Paper] [Appendix] [arXiv] [BibTeX]

- Riemannian Stein Variational Gradient Descent for Bayesian Inference.

Chang Liu, Jun Zhu. AAAI Conference on Artificial Intelligence (AAAI), 2018.

[Paper] [Appendix] [arXiv] [Code] [Slides] [Poster] [BibTeX]

- Stochastic Gradient Geodesic MCMC Methods.

Chang Liu, Jun Zhu, Yang Song. Neural Information Processing Systems (NeurIPS), 2016.

[Paper] [Appendix] [Code] [Slides] [Poster] [BibTeX]

Thesis

- Doctoral Dissertation: A Study on Efficient Bayesian Inference Methods Using Manifold Structures (in Chinese), Outstanding Doctoral Dissertation Award of Tsinghua University, supervised by Prof. Jun Zhu, 2019.

- Related talk (in English): Sampling Methods on Manifolds and Their View from Probability Manifolds. (Updated Mar. 2022)

- Related review paper (in English): Geometry in sampling methods: A review on manifold MCMC and particle-based variational inference methods (free access link before Nov. 12). Advancements in Bayesian Methods and Implementation, in Handbook of Statistics (vol. 47), Elsevier, 2022.

[Full-text paper] [BibTeX]

- Bachelor Thesis: Maximum Entropy Discrimination Latent Dirichlet Allocation with Determinantal Point Process Prior (in Chinese), supervised by Prof. Jun Zhu, 2014.

Technical Notes and Reports

- A Verbose Note on Density Functional Theory. Chang Liu. 2024.

- Benchmarking Graphormer on Large-Scale Molecular Modeling Datasets. Yu Shi, Shuxin Zheng, Guolin Ke, Yifei Shen, Jiacheng You, Jiyan He, Shengjie Luo, Chang Liu, Di He, Tie-Yan Liu. 2022.

Talk & Teaching Materials

- Technical Introduction to Diffusion Models (Dec. 2022).

-

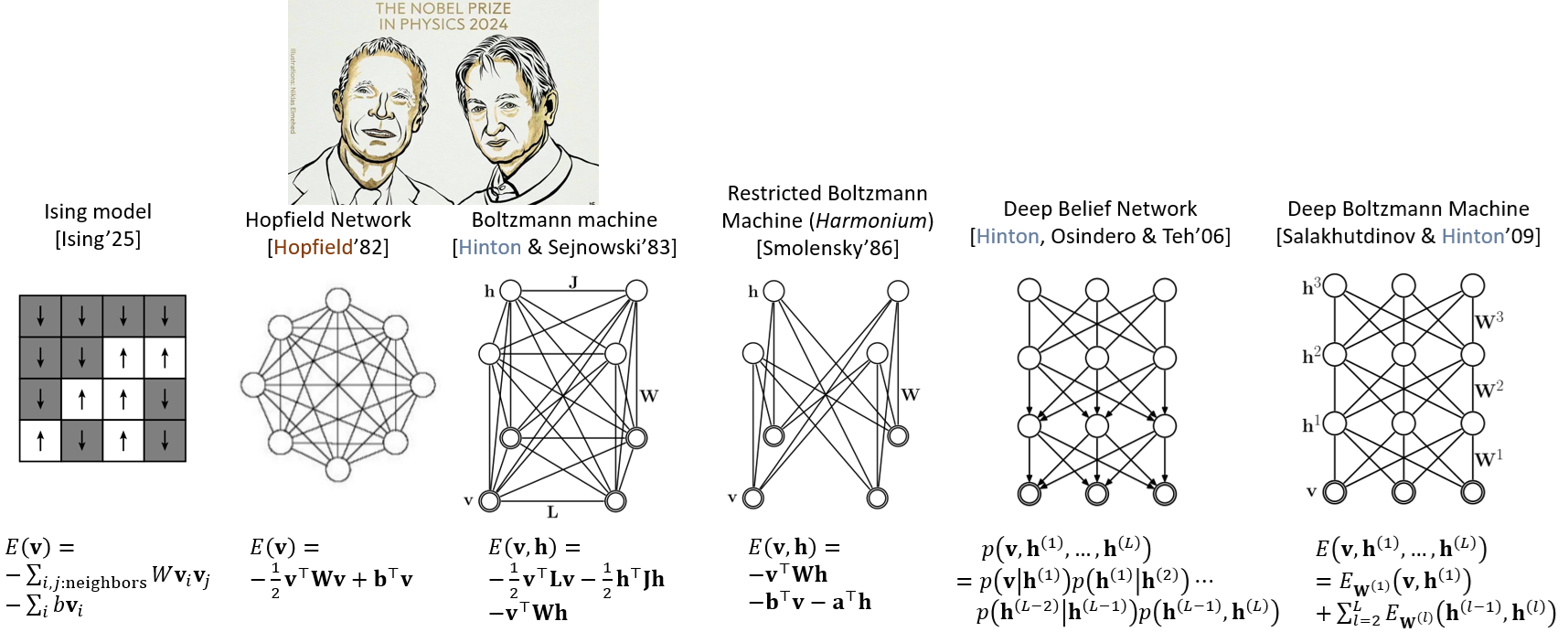

Generative Model [2019][2022][2023][2024].

- Causality Basics (based on Peters et al., 2017, "Elements of Causal Inference").

- Bayesian Learning: Basics and Advances. (Updated Aug. 27, 2021)

- Sampling Methods on Manifolds and Their View from Probability Manifolds. (Updated Mar. 2022)

- Gradient Flows.

- Statistical-Mechanics-Related Concepts in Machine Learning.

- Dynamics-Based MCMC Methods.

- Hamiltonian Monte Carlo on Riemannian Manifolds.

- Introduction to MCMC and Hamiltonian Monte Carlo.

Academic Service

- Conference Area Chair: NeurIPS (2022-), ICML (2023-), ICLR (2023-).

- Action Editor: Transactions on Machine Learning Research (TMLR) (2025-).

- Conference Reviewer: NeurIPS (2016, 2018-2021), ICML (2019-2022), ICLR (2021-2022), AAAI (2020-2021), UAI (2019), IJCAI (2021), AISTATS (2023). ICML 2020 top-33% reviewer.

- Journal Reviewer: Nature Machine Intelligence (2025), IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI) (2020-2025), IEEE Transactions on Intelligent Systems and Technology (TIST) (2019), IEEE Transactions on the Web (2019).

Teaching

- Summer School on AI+Physics, undergraduate course, School of Physics and Astronomy, Beijing Normal University. 2025 summer.

- Advanced Machine Learning (generative model section), graduate course, Institute of Computing Technology, Chinese Academy of Sciences. 2023 spring, 2024 spring.

- Summer School on Deep Generative Models, graduate course, Department of Mathematical Sciences, Tsinghua University. Jun. 2022.

- Advanced Machine Learning (generative model section), graduate course, Department of Electronic Engineering, Tsinghua University. 2019 fall, 2021 spring, 2022 spring.

- Teaching Assistant, Duke-Tsinghua Machine Learning Summer School. Aug. 2016.

- Teaching Assistant, Advanced Calculus (undergraduate course, hosting exercise classes), instructed by Prof. Yinghua Ai, Department of Mathematical Sciences, Tsinghua University. Sep. 2014 - Jan. 2015.

Honors & Awards

- Outstanding Ph.D. Graduate, Department of Computer Science and Technology, Tsinghua University, 2019.

- Outstanding Doctoral Dissertation, Tsinghua University, 2019.